I’m not entirely sure what to do with this.

It might never see the light of day, and yet it feels like something real — like I accidentally stumbled onto a missing variable in how we think about intelligence in the AI era.

It started as a question: If AI can make anyone sound brilliant, what does “intelligence” even mean anymore?

After weeks of trying to reconcile what I’ve seen in real life — teams outperforming themselves when augmented by AI, then collapsing the moment the model goes down — I landed on a simple idea: maybe we shouldn’t be measuring how smart people are, but how stable their intelligence is when the scaffolding is stripped away.

The Core of It

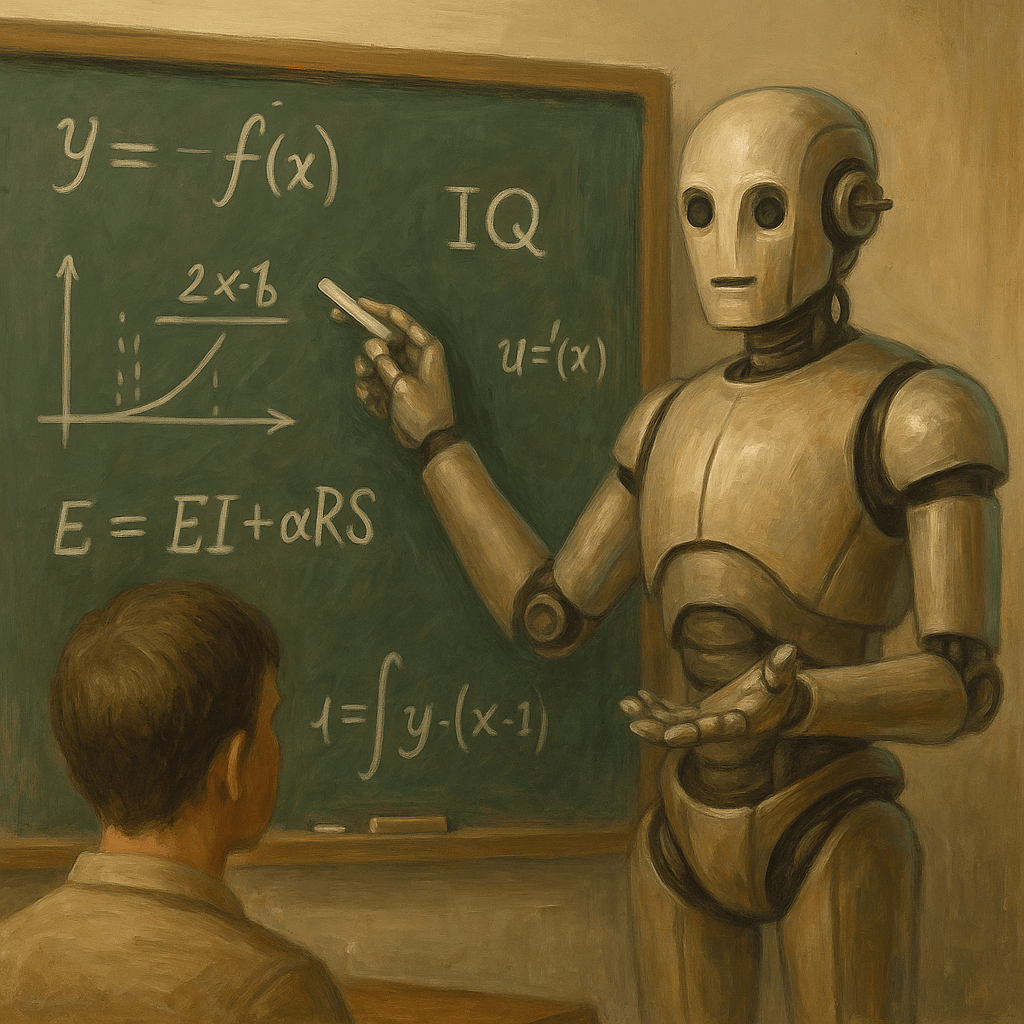

I call it the Efficiency–Fragility Intelligence (EFI) Model.

(Caution: geek stuff approaching.)

It models performance (O) as a combination of your baseline ability (O_{base}) and how well you leverage support systems — tools, AI, or structure — represented by support level S:

O(S) = O_{base} \times [1 + \alpha R S]

In plain terms:

- Efficiency Intelligence (EI): How well you turn effort into output.

- Fragile Intelligence (FI): How much you depend on your supports to stay brilliant.

- α (Alpha): How effectively you use the support.

- R (Reliability): How trustworthy the support actually is.

- S (Support): How much support you’re getting at the moment.
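To make the formula concrete, here is a minimal Python sketch of it. The function names, the example numbers, and the "share lost without support" figure are my own illustrative assumptions. In particular, that share is just one simple way to read dependence off the equation, not a formal definition of FI.

```python
def output(o_base: float, alpha: float, reliability: float, support: float) -> float:
    """Performance under support: O(S) = O_base * (1 + alpha * R * S)."""
    return o_base * (1.0 + alpha * reliability * support)


def share_lost_without_support(alpha: float, reliability: float, support: float) -> float:
    """Fraction of current output that vanishes if S drops to 0.

    Illustrative assumption: (O(S) - O(0)) / O(S) = aRS / (1 + aRS).
    """
    boost = alpha * reliability * support
    return boost / (1.0 + boost)


# Hypothetical person: baseline 10, uses support moderately well (alpha = 0.5),
# fully reliable support (R = 1.0), heavy support level (S = 2.0).
with_ai = output(o_base=10.0, alpha=0.5, reliability=1.0, support=2.0)
without_ai = output(o_base=10.0, alpha=0.5, reliability=1.0, support=0.0)
lost = share_lost_without_support(alpha=0.5, reliability=1.0, support=2.0)

print(with_ai, without_ai, lost)  # 20.0 10.0 0.5
```

With these made-up numbers, support doubles the output, which also means half of what you see disappears the moment the support does.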

What makes it fascinating is that it separates baseline ability from assisted performance: set S to zero and O(S) collapses back to O_{base}, so everything above that line is borrowed from the support.

It doesn’t just tell you who’s smart — it tells you who stays smart when the lights go out.

The Ironic Twist

Here’s where it gets meta.

The model itself, this whole thing, was built with extremely high Fragile Intelligence.

It depends on tools like ChatGPT, Claude, and a swarm of notebooks and diagrams I’d never replicate from memory.

It’s literally a framework about dependency, born out of dependency.

I can’t even fully do the math without the AI that helped me derive it.

So yes, the irony is thick: a model about human fragility that can’t stand on its own legs without machine scaffolding.

And maybe that’s the point.

Why This Matters (Even If I Never Publish It)

If we can measure how dependent people (and organizations) have become on AI, we can start designing for resilience, not just efficiency.

It could reshape how we think about:

- Hiring: Who still performs when the systems fail?

- Education: Are we teaching people or just teaching them to use tools?

- Policy: How fragile are we as a society if the algorithms stop cooperating?

But for now, it’s just sitting here — this strange little intersection of psychology, math, and existential reflection — too real to ignore, too early to fit anywhere.

Maybe This Is the Whole Point

Maybe EFI isn’t just a formula — maybe it’s a mirror. We’re all running our own O(S) equation, trying to stay effective without becoming brittle. And if I’m being honest, mine’s running with a dangerously high S (support). So no, I don’t know if this will ever be peer-reviewed, or if anyone will ever cite it, or if I’ll have the courage to push it into academic circles.

But it’s science.

It’s legitimate.

And for now, that’s enough.
